How do I perform an analysis of a test completed by students?

A statistical analysis can be performed after all students have completed a test. The results can help you improve the assessment for next time, or adjust the scores of current attempts.

The Test Item Analysis tool provides statistics on overall test performance and on individual test questions (notably item difficulty and item discrimination) to help you recognise questions that might be poor discriminators of student performance. You can use this information to improve questions for future use, or to adjust current attempts by eliminating weak questions or correcting errors or ambiguity in them. Analyses can be run on deployed tests, including those composed of random blocks.

Item difficulty is the percentage (or proportion) of students who answered the question correctly, also known as the p-value. To calculate the difficulty of a question, divide the number of students who answered it correctly by the total number of students who answered it, then multiply by 100. The higher the difficulty index (that is, the larger the percentage of students answering correctly), the easier the question is understood to be.
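The calculation above can be sketched in a few lines of Python. This is an illustration only, not how the Test Item Analysis tool is implemented: the function name `item_difficulty` and the representation of responses as one `True`/`False` value per student who answered the question are assumptions made for the example.

```python
def item_difficulty(responses: list[bool]) -> float:
    """Return the percentage of students who answered a question correctly.

    `responses` holds one entry per student who answered the question:
    True for a correct answer, False otherwise (a hypothetical format).
    """
    if not responses:
        raise ValueError("no students answered this question")
    correct = sum(responses)  # True counts as 1, False as 0
    return correct / len(responses) * 100


# Hypothetical data: 18 of 24 students answered correctly.
answers = [True] * 18 + [False] * 6
print(item_difficulty(answers))  # 75.0
```

A result of 75.0 means three quarters of the students got the question right; a higher figure indicates an easier question.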

Item discrimination measures how effectively an individual question ‘discriminates’ between students who performed well and those who performed poorly on the test overall; in other words, how far success on that question is an indicator of overall performance. A question that all students answer correctly cannot separate stronger from weaker students, so it will have a low discrimination rating. There are several formulae for calculating discrimination, and discrimination scores range from -1 to +1. Scores above +0.2 indicate good discrimination, while negative scores indicate poor discrimination (weaker students tend to answer the question correctly more often than stronger ones).
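As one example of the several formulae mentioned above, the sketch below computes the classic upper-lower group index: rank students by total test score, then subtract the proportion of the bottom group answering the question correctly from the proportion of the top group doing so. This is not necessarily the formula the Test Item Analysis tool uses. The function name `discrimination_index`, the data format and the choice of top and bottom quarters (`group_fraction=0.25`; 27% is another common choice) are all assumptions for illustration.

```python
def discrimination_index(item_correct: list[bool], total_scores: list[float],
                         group_fraction: float = 0.25) -> float:
    """Upper-lower group discrimination index D = p_upper - p_lower.

    item_correct[i] is True if student i answered this question correctly;
    total_scores[i] is that student's overall test score. D ranges from -1 to +1.
    """
    if len(item_correct) != len(total_scores):
        raise ValueError("need one item result and one total score per student")
    n = len(total_scores)
    k = max(1, round(n * group_fraction))  # size of each comparison group
    if 2 * k > n:
        raise ValueError("not enough students to form distinct groups")
    # Rank students from highest to lowest total score.
    order = sorted(range(n), key=lambda i: total_scores[i], reverse=True)
    upper, lower = order[:k], order[-k:]
    p_upper = sum(item_correct[i] for i in upper) / k  # proportion correct, top group
    p_lower = sum(item_correct[i] for i in lower) / k  # proportion correct, bottom group
    return p_upper - p_lower


# Hypothetical data for 8 students.
totals = [95, 88, 80, 75, 60, 55, 40, 30]
correct = [True, True, False, True, True, False, False, True]
print(discrimination_index(correct, totals))  # 0.5
```

With this formula, a question that every student answers correctly gives D = 0, and a question answered correctly mainly by the weaker students gives a negative D.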

The Test Item Analysis tool can be accessed from the contextual menu (opened by clicking the chevron) of:

- A test deployed in a content area

- A deployed test listed on the Tests page

- A Grade Centre column for a test

Read this article for full details on using and interpreting the Test Item Analysis.

Select the test you want to run the item analysis on. You can also view the results of previous analyses, which is useful if you want to compare results, for example for the same test taken at the beginning of a module and again at the end of the module.

If you run the analysis on a test containing manually graded questions (such as Essay, Short Answer or File Response) that have not yet been scored, statistics are generated only for the auto-scored questions. You can run the Test Item Analysis again once those questions have been graded.

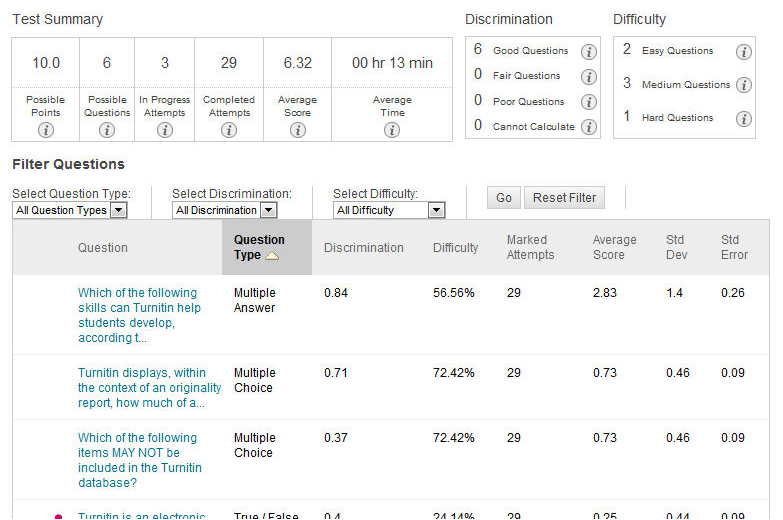

The analysis shows:

- A test summary – points possible, the number of possible questions, the number of attempts in progress, the number of completed attempts, the average score and average time taken to complete the test.

- Discrimination data – the number of good, fair, and poor questions.

- Difficulty data – the number of easy, medium, and hard questions.

- Data about each question – the question text, question type, discrimination index, difficulty index, average score and standard deviation. Questions can be filtered by question type, by discrimination (good, fair or poor) or by difficulty (easy, medium or hard). Clicking a question shows, for each quartile of students, how many gave each response to that question.

- Questions recommended for review are flagged, and you can edit the test directly from a link on the page.
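To make the per-question figures concrete, the sketch below computes a question's average score and standard deviation, sorts its difficulty and discrimination into bands, and flags it for review. All thresholds here are hypothetical: the +0.2 and below-zero discrimination cut-offs follow the description earlier in this article, treating 0 to +0.2 as "fair" is an inference, and the easy/hard cut-offs are invented for illustration, so the tool's own bands and review rules may differ. Using the population standard deviation (`pstdev`) is also an assumption.

```python
from statistics import mean, pstdev

# Hypothetical thresholds for illustration only; the tool's own cut-offs may differ.
EASY_ABOVE = 80.0   # difficulty (% correct) above this counts as "easy"
HARD_BELOW = 30.0   # difficulty below this counts as "hard"
GOOD_ABOVE = 0.2    # discrimination above this counts as "good"


def difficulty_band(difficulty: float) -> str:
    if difficulty > EASY_ABOVE:
        return "easy"
    if difficulty < HARD_BELOW:
        return "hard"
    return "medium"


def discrimination_band(d: float) -> str:
    if d > GOOD_ABOVE:
        return "good"
    if d < 0:
        return "poor"
    return "fair"  # assumed: anything from 0 up to the "good" threshold


def question_summary(scores: list[float], difficulty: float,
                     discrimination: float) -> dict:
    """Summarise one question: average score, spread, bands and a review flag."""
    band = discrimination_band(discrimination)
    return {
        "average": mean(scores),
        "std_dev": pstdev(scores),  # population standard deviation
        "difficulty": difficulty_band(difficulty),
        "discrimination": band,
        "review": band == "poor",  # hypothetical rule: flag poor discriminators
    }


# Hypothetical scores for a 2-point question answered by six students.
print(question_summary([2, 2, 0, 2, 1, 2], difficulty=66.7, discrimination=-0.1))
```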